Published by I Putu Arka Suryawan on Thursday, May 22, 2025

When I first sat in front of a computer in the early 2000s, writing my first lines of code in Clipper for DOS applications, I never imagined I'd one day be architecting AI systems that transform entire business operations. Yet here I am, more than two decades later, having witnessed and adapted to one of the most remarkable periods of technological evolution in human history.

My journey began in January 1981, when I was born into a family that deeply valued education and entrepreneurship. By the time I earned my Bachelor's degree in Informatics Engineering in the early 2000s, the tech world was already buzzing with possibilities, yet my first real taste of programming was surprisingly old-school.

Clipper on DOS – that's where it all started. Those green-on-black screens, the satisfaction of seeing text appear exactly where you placed it, and the pure logic of making a machine do precisely what you told it to do. There was something beautifully straightforward about those early days. No fancy interfaces, no drag-and-drop – just you, the code, and the immediate feedback of success or failure.

Looking back, I'm grateful for starting in that era. It taught me the fundamentals that many developers today might skip over. When you're working with limited memory and processing power, every line of code matters. You learn to think efficiently, to solve problems with elegant simplicity rather than brute force.

The mid-2000s brought waves of change that would have overwhelmed anyone not ready to adapt. Windows-based applications were becoming the norm, web development was exploding, and suddenly everyone was talking about this thing called "the internet" as if it would change everything.

Spoiler alert: it did.

I remember the internal struggle many of my peers faced. Do we stick with what we know, or do we dive headfirst into these new technologies? For me, the choice was clear, though not always easy. I chose to embrace change as a constant companion rather than fight it.

This decision shaped my entire career philosophy: technology is a tool, not a destination. Whether I was learning new programming languages, understanding database architectures, or later diving into cloud platforms, I always kept one eye on the fundamentals and another on the horizon.

The transition to web development felt like stepping into a completely different universe. Suddenly, applications weren't just running on one machine – they were connecting people across the globe. The implications were staggering, and honestly, a bit overwhelming at first.

I spent countless nights learning HTML, CSS, JavaScript, and server-side technologies. Each new concept felt like unlocking a door to possibilities I hadn't even imagined. The web wasn't just about displaying information; it was about creating experiences, building communities, and solving problems on a scale that desktop applications simply couldn't match.

What struck me most during this period was how quickly everything moved. In the DOS era, you might learn a technology and use it for years. In web development, frameworks were evolving monthly. This taught me perhaps the most valuable lesson of my career: the ability to learn continuously is more important than any single technology you master.

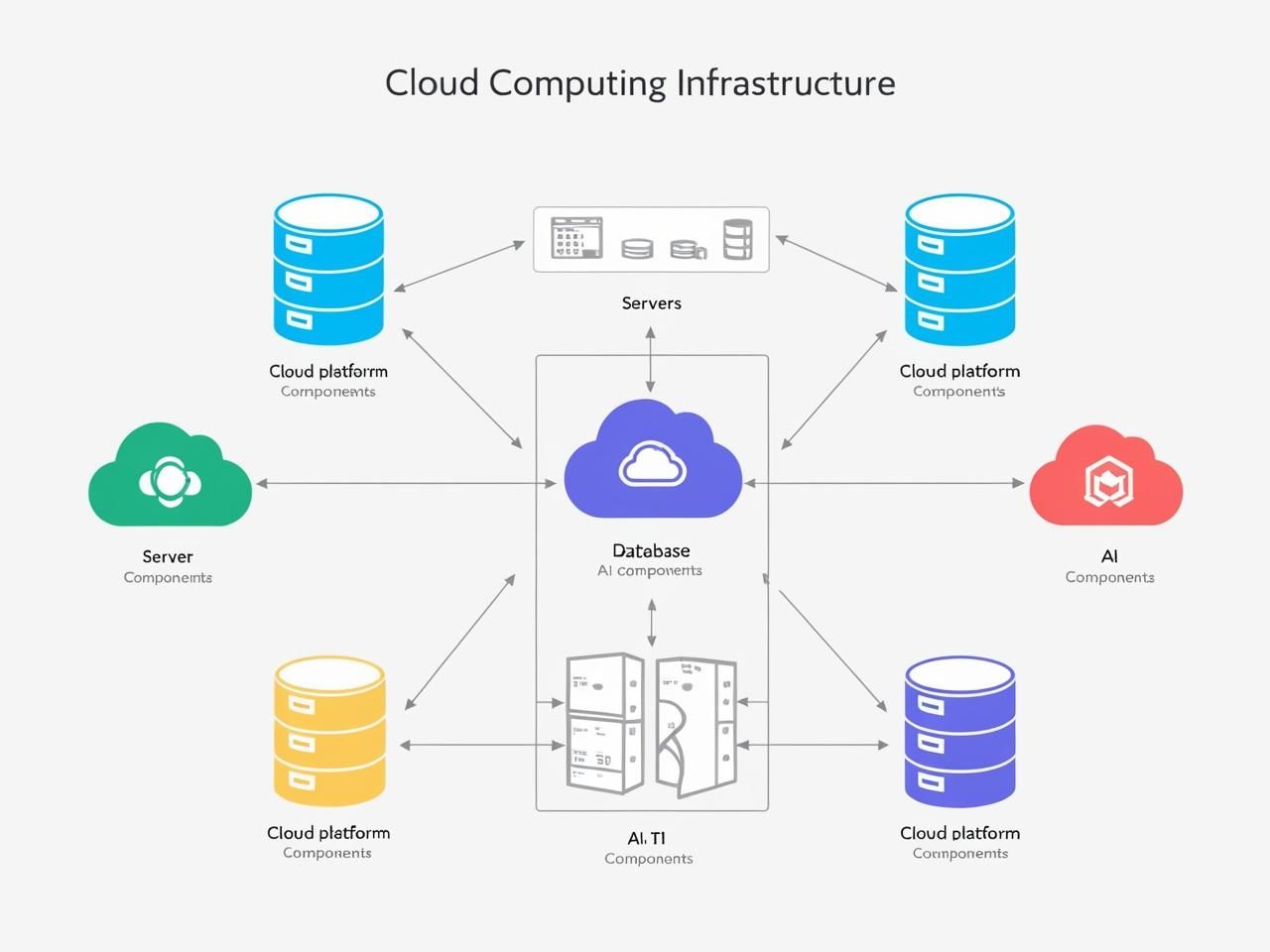

When cloud computing started gaining traction in the late 2000s and early 2010s, I witnessed what might be the most fundamental shift in how we think about infrastructure and software deployment. Suddenly, the constraints of physical hardware became nearly irrelevant.

I remember my first experience with cloud platforms – the awe of spinning up servers in minutes rather than waiting weeks for hardware procurement. The scalability, the global reach, the pay-as-you-use model – it was revolutionary. But more than the technical capabilities, cloud computing changed how we approach problem-solving itself.

Projects that would have been impossible for small teams or startups suddenly became feasible. Geographic boundaries mattered far less. The democratization of powerful computing resources meant that good ideas could flourish regardless of the size of your IT budget.

Adapting to cloud technologies required not just learning new tools but fundamentally rethinking how we architect solutions. Microservices, containerization, serverless computing – each concept required letting go of old assumptions and embracing new paradigms.

Since 2023, I've focused intensively on artificial intelligence technologies, and I can honestly say this feels like the most transformative shift I've experienced in my entire career. The potential of AI to augment human capabilities, automate complex processes, and generate insights from data is unprecedented.

Working with AI has taught me that we're not just building software anymore – we're creating systems that can learn, adapt, and even surprise us with their capabilities. The businesses I've helped adopt AI solutions have seen dramatic improvements in efficiency, customer experience, and decision-making.

But perhaps what excites me most about AI is how it brings us full circle to those fundamental programming principles I learned in the DOS era. At its core, AI is still about logic, problem-solving, and making systems do what we need them to do – just at a scale and sophistication that would have been unimaginable twenty years ago.

Looking back on this journey, several key insights stand out:

Technology is cyclical, but principles are eternal. The specific tools change, but good software design, efficient problem-solving, and user-focused thinking remain constant. Those fundamentals I learned writing Clipper code still guide how I approach AI architecture today.

Embrace the learning curve. Every major technological shift comes with a steep learning curve. Instead of seeing this as an obstacle, I've learned to view it as an opportunity. The steeper the curve, the greater the competitive advantage for those willing to climb it.

Bridge the old with the new. My experience with legacy systems has proven invaluable when helping businesses modernize. Understanding where we came from helps us make better decisions about where we're going.

Focus on solving real problems. Whether it's a DOS application managing inventory or an AI system optimizing supply chains, the best technology solutions are those that address genuine human needs. The tools evolve, but the mission remains the same.

Never stop being curious. The moment you think you've learned enough is the moment you start becoming obsolete. The tech industry rewards those who maintain a beginner's mind, regardless of their experience level.

As I look toward the future, I'm more excited than ever about the possibilities ahead. Quantum computing is on the horizon, AI continues to evolve at breakneck speed, and new paradigms are emerging that we can barely imagine today.

What gives me confidence about navigating whatever comes next is the same thing that helped me transition from DOS to web to cloud to AI: the principles remain constant even as the tools evolve. Good problem-solving, continuous learning, and a focus on creating value for real people – these will never go out of style.

To fellow developers who might be feeling overwhelmed by the pace of change, I offer this perspective: every generation of developers has felt like they were living through unprecedented technological shifts. The secret isn't to master every new technology that emerges, but to develop the skills and mindset that allow you to adapt and thrive regardless of what comes next.

The journey from DOS to cloud has been incredible, but I have a feeling the best is yet to come.