Published by I Putu Arka Suryawan at Thu May 22 2025

If you've been programming for more than two decades like I have, you've witnessed something remarkable: the complete transformation of how we think about, write, and organize code. My journey from writing Clipper applications in the DOS era to architecting .NET solutions today has taught me that while programming languages evolve dramatically, the fundamental principles of good software development remain surprisingly constant.

Today, I want to share the lessons I've learned about programming paradigms, developer mindset shifts, and the universal truths about software development that transcend any specific technology.

Let me take you back to the early 2000s, when Clipper applications were still running in many businesses. For those who never experienced it, Clipper was a programming language primarily used for creating database applications on DOS systems. It was procedural, straightforward, and brutally honest about what it could and couldn't do.

Writing Clipper code was like building with LEGO blocks – each piece had a clear purpose, and you assembled them in a logical sequence to create something functional. There was no hiding behind abstractions or frameworks. If your program didn't work, it was usually because your logic was flawed, not because some mysterious framework behavior was interfering.

```clipper
// Simple Clipper database operation
USE CUSTOMER
LOCATE FOR NAME = "John Smith"
IF FOUND()
   REPLACE BALANCE WITH BALANCE + 100
   ? "Customer balance updated"
ELSE
   ? "Customer not found"
ENDIF
```

This simplicity taught me several fundamental lessons that I still apply today:

Directness breeds understanding. When every line of code does exactly what it says, debugging becomes a logical exercise rather than a detective story. This taught me to value code clarity over cleverness – a principle that serves me well regardless of the technology stack.

Resource constraints force creativity. Clipper applications had to be efficient because system resources were limited. You couldn't solve performance problems by throwing more memory at them. This constraint taught me to think carefully about algorithms and data structures from the beginning, rather than optimizing as an afterthought.

Database-first thinking. Most Clipper applications were built around database operations. This gave me a deep appreciation for data modeling and the understanding that good software often starts with good data design – something that remains true whether you're building a simple CRUD app or a complex microservices architecture.

The transition from Clipper's procedural approach to object-oriented programming was my first major paradigm shift, and honestly, it nearly broke my brain.

In Clipper, you had data and functions that operated on that data. Simple. In object-oriented programming, suddenly data and functions were combined into objects that could interact with each other in complex ways. The first time someone explained inheritance, polymorphism, and encapsulation to me, I wondered if they were making it unnecessarily complicated.

But as I dove deeper into languages like C++ and later C#, I began to understand the power of this approach. Object-oriented programming wasn't just a different way to organize code – it was a different way to think about problems.

Modeling the real world. OOP taught me to think about software as a collection of entities that mirror real-world relationships. A Customer object has properties and behaviors, just like a real customer. This made complex business logic more intuitive to design and maintain.

Code reusability became practical. While you could create reusable functions in Clipper, OOP made code reuse much more natural. Inheritance meant you could build on existing work without starting from scratch, and polymorphism meant you could write code that worked with different types of objects without knowing their specific implementation.

Encapsulation changed debugging. The ability to hide internal implementation details behind clean interfaces made large applications much more manageable. When something went wrong, you had a much clearer idea of where to look because the boundaries between different parts of the system were well-defined.
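To make the three ideas above concrete, here is a minimal sketch. It is written in Python rather than C# purely to keep it short, and every name in it (`Customer`, `RetailCustomer`, `WholesaleCustomer`, `invoice_total`, the 10% rate) is a hypothetical example rather than code from a real project.

```python
from abc import ABC, abstractmethod


class Customer(ABC):
    """Base type: shared state and behavior live here and are inherited."""

    def __init__(self, name: str, balance: float = 0.0) -> None:
        self.name = name
        self._balance = balance  # internal detail, hidden by convention

    @property
    def balance(self) -> float:
        """Read-only view; callers cannot assign to the balance directly."""
        return self._balance

    def credit(self, amount: float) -> None:
        """The one supported way to raise the balance, so the rule lives in one place."""
        if amount <= 0:
            raise ValueError("credit amount must be positive")
        self._balance += amount

    @abstractmethod
    def discount_rate(self) -> float:
        """Each customer type supplies its own rate."""


class RetailCustomer(Customer):
    def discount_rate(self) -> float:
        return 0.0


class WholesaleCustomer(Customer):
    def discount_rate(self) -> float:
        return 0.10  # hypothetical rate, for illustration only


def invoice_total(customer: Customer, subtotal: float) -> float:
    """Works with any Customer subtype without knowing which one it received."""
    return round(subtotal * (1 - customer.discount_rate()), 2)
```

Here `credit(100)` plays the role of `REPLACE BALANCE WITH BALANCE + 100` in the Clipper snippet, except that the validation travels with the data. If a balance ever looks wrong, `credit` is the first place to look, which is the debugging benefit of well-defined boundaries.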

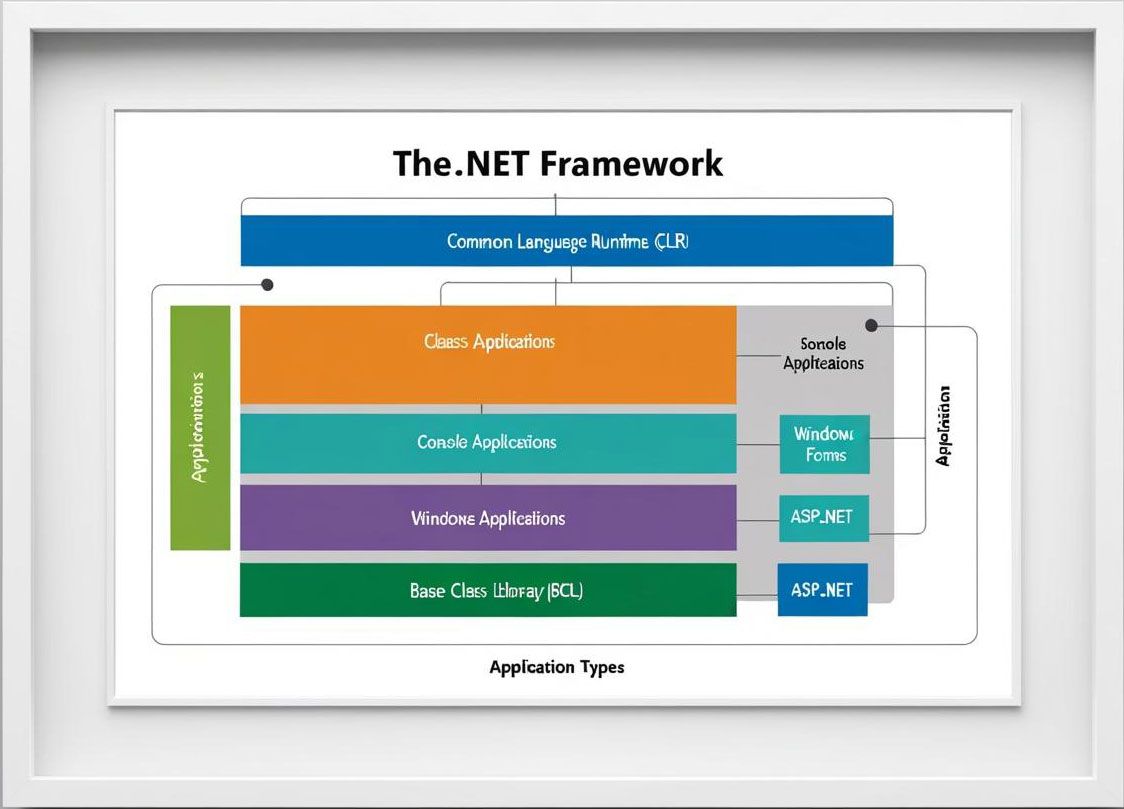

When .NET arrived in the early 2000s, it represented another massive shift in how we approach software development. This wasn't just a new programming language – it was an entirely new way of thinking about applications, libraries, and the relationship between code and the underlying system.

The .NET Framework introduced concepts that seemed almost magical compared to what I was used to:

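Automatic memory management. No more explicitly freeing every allocation: the garbage collector reclaimed managed memory for me. Unmanaged resources such as file handles and database connections still needed deterministic cleanup through `IDisposable`, but the everyday bookkeeping was gone. This was liberating but also slightly terrifying – I had less control, but I could focus more on business logic.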

Rich base class library. Instead of writing everything from scratch or hunting for third-party libraries, .NET provided a comprehensive set of pre-built functionality. Need to work with XML? There's a namespace for that. Need to handle HTTP requests? Built right in.

Language interoperability. The ability to use multiple languages within the same application opened up new possibilities for team collaboration and leveraging existing expertise.

But perhaps the most significant change was the shift from writing applications to composing applications. In the Clipper days, you wrote most of your functionality from scratch. In .NET, you spend more time understanding and combining existing components than writing new code.

After decades of moving between different programming paradigms, I've identified several universal principles that apply regardless of the technology:

Whether you're writing a 50-line Clipper script or an enterprise .NET application with thousands of classes, complexity is always your biggest enemy. The most maintainable code I've written has always been the simplest code that solves the problem effectively.

In Clipper, simplicity was forced by the limitations of the environment. In .NET, you have to choose simplicity deliberately, which is actually much harder. The framework gives you so many options that it's easy to over-engineer solutions.

Good data design drives everything else. This became clear to me in Clipper, where the database structure essentially determined how your application would be organized, and it remains true in modern .NET applications. Whether you're designing classes, microservices, or database schemas, getting the data model right is usually the key to everything else falling into place.

Every programming paradigm introduces some level of abstraction. Clipper abstracted away the complexities of file system operations. OOP abstracts implementation details behind interfaces. .NET abstracts platform-specific details behind the runtime.

The key lesson I've learned is that you need to understand what's happening at the layer below your abstraction. You don't need to be an expert, but you need enough understanding to make informed decisions and debug problems when they arise.

Testing a Clipper application meant running it and checking if the output was correct. Testing an object-oriented application introduced unit testing and mocking. Testing in .NET brought integration testing, dependency injection for testability, and continuous integration pipelines.

But the core principle remains the same: you need to verify that your code does what you think it does, and you need to be able to verify this quickly and repeatedly.
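As a rough illustration of "quickly and repeatedly", the sketch below injects the storage dependency so the business rule can be checked without a real database. It is written in Python for brevity, and `CustomerStore`, `InMemoryStore`, and `credit_customer` are hypothetical names chosen for this example.

```python
from typing import Dict, Optional, Protocol


class CustomerStore(Protocol):
    """What the business logic needs from storage; any object with these methods will do."""

    def get_balance(self, name: str) -> Optional[float]: ...

    def set_balance(self, name: str, balance: float) -> None: ...


class InMemoryStore:
    """Test double: a dictionary standing in for the real database."""

    def __init__(self, balances: Dict[str, float]) -> None:
        self._balances = dict(balances)

    def get_balance(self, name: str) -> Optional[float]:
        return self._balances.get(name)

    def set_balance(self, name: str, balance: float) -> None:
        self._balances[name] = balance


def credit_customer(store: CustomerStore, name: str, amount: float) -> bool:
    """Add amount to the customer's balance; return False if the customer is unknown."""
    balance = store.get_balance(name)
    if balance is None:
        return False
    store.set_balance(name, balance + amount)
    return True


def test_credit_existing_customer() -> None:
    store = InMemoryStore({"John Smith": 50.0})
    assert credit_customer(store, "John Smith", 100.0) is True
    assert store.get_balance("John Smith") == 150.0


def test_credit_unknown_customer() -> None:
    store = InMemoryStore({})
    assert credit_customer(store, "Nobody", 100.0) is False
    assert store.get_balance("Nobody") is None
```

Because `credit_customer` only sees the `CustomerStore` interface, the two tests run in milliseconds and can be repeated on every change. A test runner such as pytest would discover the `test_` functions, though calling them directly works too.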

Regardless of the programming paradigm, software is written by humans for humans. The most elegant technical solution is useless if your team can't understand and maintain it, or if it doesn't solve real user problems.

This lesson has become more important as frameworks have become more powerful. It's easy to get caught up in the technical possibilities and forget that someone else will need to maintain your code long after you've moved on to the next project.

The evolution from Clipper to .NET required several fundamental shifts in how I think about programming:

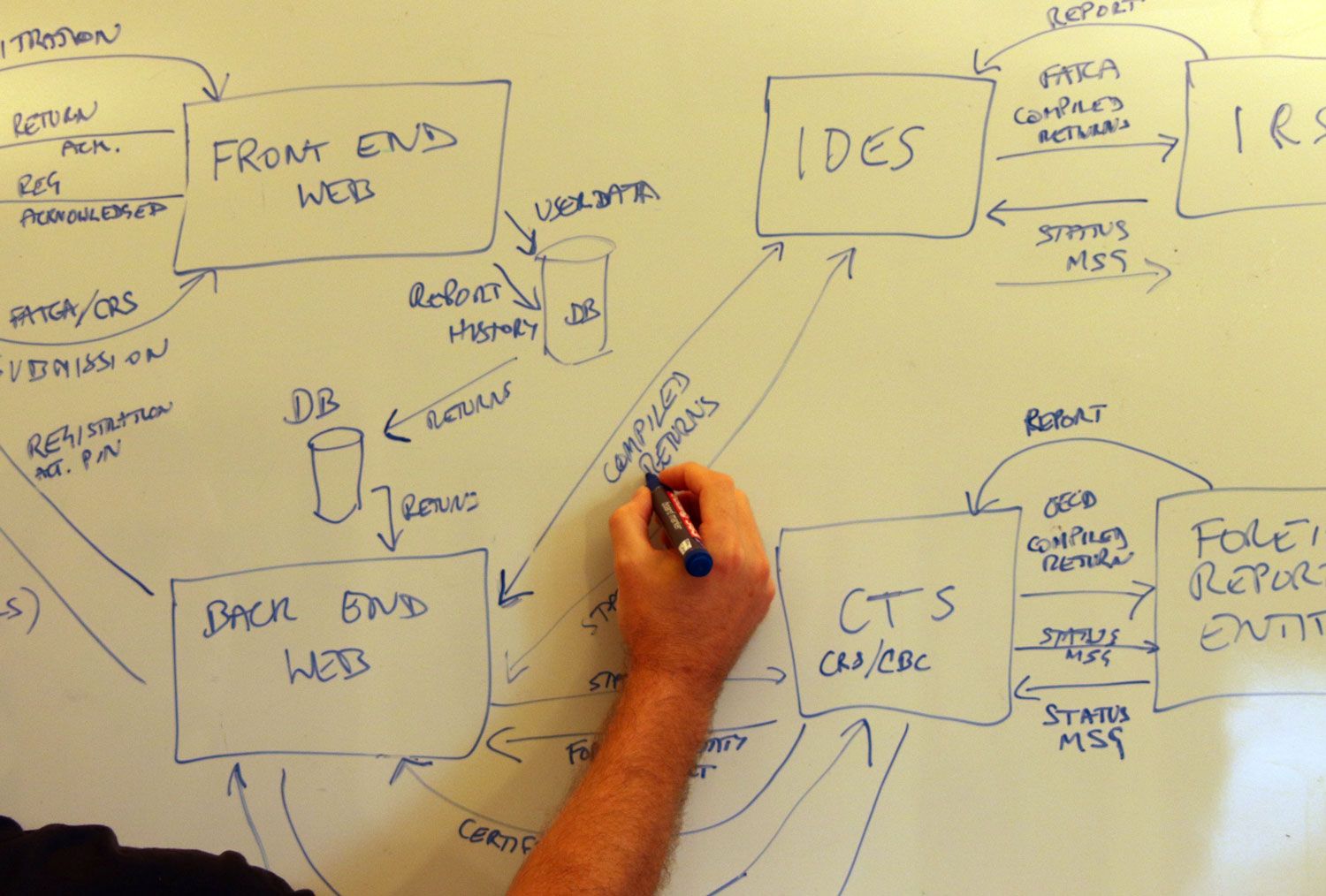

Clipper programs were largely linear – you started at the beginning and proceeded step by step to the end. Modern .NET applications are layered: presentation, business logic, data access, and service layers all interact in complex ways.

This shift required learning to think architecturally rather than just algorithmically. It's not enough to solve the immediate problem; you need to consider how your solution fits into the larger system and how it will need to evolve over time.

In the Clipper era, if you needed functionality, you built it. In the .NET world, if you need functionality, you first check if someone has already built it, then you configure it to meet your needs.

This shift has made developers more productive but has also required new skills in evaluating, integrating, and configuring third-party components. Sometimes the hardest part of solving a problem is choosing between the dozen different libraries that claim to solve it.

Modern .NET development, especially with frameworks like Entity Framework and ASP.NET Core, often involves describing what you want rather than specifying exactly how to achieve it. LINQ queries, for example, let you describe the data you want without worrying about the specific steps to retrieve it.

This declarative approach is powerful but requires a different kind of trust in the underlying framework. You need to understand the framework well enough to know when your declarative code will perform well and when you need to drop down to more explicit control.
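The contrast between specifying the steps and describing the result can be sketched in a few lines. This is Python rather than LINQ, so it only approximates the idea, and the `Customer` type and both function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Customer:
    name: str
    balance: float


def overdrawn_names_imperative(customers: List[Customer]) -> List[str]:
    """Spell out every step: loop, test, collect, then sort."""
    names: List[str] = []
    for customer in customers:
        if customer.balance < 0:
            names.append(customer.name)
    names.sort()
    return names


def overdrawn_names_declarative(customers: List[Customer]) -> List[str]:
    """Describe the result: sorted names of customers whose balance is negative."""
    return sorted(c.name for c in customers if c.balance < 0)
```

Both functions return the same list. The declarative version leaves the "how" to the language, which is harmless for an in-memory list. With LINQ over Entity Framework, the query is translated to SQL by the provider, so knowing roughly what gets generated is what tells you when to drop down to more explicit control.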

Despite all these paradigm shifts, some aspects of good programming have remained remarkably constant:

Clear naming matters. Whether you're naming a Clipper variable or a .NET class, descriptive names make code self-documenting and maintainable.

Small, focused units are better than large, monolithic ones. This was true for Clipper functions, OOP methods, and .NET microservices.

Error handling is crucial. The specific mechanisms have evolved from simple error codes to exception handling to modern Result patterns, but the need to handle failure cases gracefully hasn't changed.

Performance still matters. The specific bottlenecks have changed – from disk I/O in Clipper to network latency in distributed .NET applications – but the need to understand and optimize performance remains.

Documentation saves lives. Whether it's comments in Clipper code or XML documentation in .NET assemblies, future maintainers (including yourself) will thank you for explaining not just what the code does, but why it does it.
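The progression mentioned under error handling, from error codes to exceptions to Result-style return values, can be sketched side by side. This is an illustrative Python version, not a reference implementation; the status constants, the `InsufficientFunds` exception, and the `Ok`/`Err` types are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Tuple, Union

STATUS_OK, STATUS_INSUFFICIENT_FUNDS = 0, 1  # hypothetical status codes


def withdraw_with_code(balance: float, amount: float) -> Tuple[int, float]:
    """Error-code style: the caller must remember to inspect the status."""
    if amount > balance:
        return STATUS_INSUFFICIENT_FUNDS, balance
    return STATUS_OK, balance - amount


class InsufficientFunds(Exception):
    """Raised by the exception-style variant."""


def withdraw_or_raise(balance: float, amount: float) -> float:
    """Exception style: failure interrupts the normal flow and cannot be silently ignored."""
    if amount > balance:
        raise InsufficientFunds(f"cannot withdraw {amount} from {balance}")
    return balance - amount


@dataclass(frozen=True)
class Ok:
    value: float


@dataclass(frozen=True)
class Err:
    reason: str


def withdraw_result(balance: float, amount: float) -> Union[Ok, Err]:
    """Result style: success and failure are both ordinary values in the return type."""
    if amount > balance:
        return Err("insufficient funds")
    return Ok(balance - amount)
```

All three variants enforce the same rule. What changes is how hard it is for a caller to forget the failure case: easy with a status code, impossible to miss at runtime with an exception, and visible in the signature with a Result type.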

As I look toward the future of programming – with AI-assisted development, quantum computing, and paradigms we haven't even imagined yet – I'm confident that some lessons will continue to apply:

The fundamental challenges of software development – understanding requirements, managing complexity, ensuring reliability, and creating maintainable solutions – will remain constant even as our tools continue to evolve.

The specific syntax and frameworks we use will continue to change, but the underlying principles of good software design, the importance of clear communication, and the need to balance technical excellence with practical constraints will endure.

For developers who are just starting their careers or are in the middle of their own paradigm shifts, here's what I wish I could tell my younger self:

Embrace the discomfort of learning new paradigms. Each shift in thinking makes you a better programmer, even when it's frustrating in the moment.

Don't abandon old lessons when learning new technologies. The fundamentals you learn early in your career will serve you throughout your journey, even if they need to be adapted to new contexts.

Focus on principles over syntax. Programming languages come and go, but good design principles, problem-solving skills, and the ability to learn new things quickly will never go out of style.

Build things. The best way to understand any programming paradigm is to use it to solve real problems. Don't just read about it – write code, make mistakes, and learn from them.

The journey from Clipper to .NET has taught me that programming is not just about learning new syntax or mastering new frameworks – it's about continuously evolving your thinking while holding onto the timeless principles that make software truly great.